Under the Hood: A Deep Dive into How Our AI Apps Are Made

27.12.2025

We’re Not a Corporation — We’re a Crew of Builders

We’re a small, agile team of product founders, AI researchers, mobile and backend developers, and creative minds on a mission to build truly useful apps powered by real AI.

We’re not building clickbait tools or VC-fueled promises — we’re building functional, real-life products that help users get healthier, smarter, and more creative every day.

On the product side, we have founders with deep experience in growth, UX, and go-to-market strategy. On the tech side, our crew includes:

Machine learning engineers & data scientists

Mobile developers (Swift, Kotlin, React Native)

Full-stack and backend engineers (Python, FastAPI, PostgreSQL)

DevOps & QA specialists ensuring stability and speed

We integrate directly with leading AI APIs such as OpenAI’s GPT models, and we’re not afraid to go deeper. For more complex logic and context-aware intelligence, we use:

LangChain / LangGraph for multi-step workflows

Vector databases for semantic memory & personalization

Real-time APIs, local device data (e.g., Apple Health), and live user feedback

We’re not just building apps; we’re creating an interconnected AI ecosystem for health, education, and creativity.

Catademy — Smart Education, Built on AI

Catademy isn’t just another homework helper. It’s an AI-powered learning companion that helps students write essays, check grammar, solve multiple-choice questions, and more.

Under the hood, Catademy combines OpenAI’s ChatGPT models, carefully tuned prompts, and advanced models like DeepSeek for complex reasoning tasks. These models make it easy to spin up useful features quickly, but personalization is the real challenge. Generic answers are simple; adaptive, student-specific feedback requires smart prompt engineering, context retention, and a balance between speed and cost.

We’re constantly iterating to make the system not only intelligent, but relevant — learning how each student writes, how they improve, and what support they really need.

This is what makes Catademy different: not just “AI that answers,” but AI that adapts.
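To make the context-retention idea a little more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not Catademy's actual code: the `StudentContext` class, its method names, and the tutor prompt wording are all hypothetical. It keeps only a bounded number of recent observations about a student, so the prompt stays short (which is one way to balance relevance against speed and cost), and it assembles messages in the common role/content chat format without calling any API.

```python
from collections import deque


class StudentContext:
    """Hypothetical per-student memory: keeps only the most recent notes."""

    def __init__(self, max_notes: int = 3) -> None:
        # A bounded deque drops the oldest note automatically,
        # which caps prompt length (and therefore cost).
        self.notes: deque[str] = deque(maxlen=max_notes)

    def remember(self, note: str) -> None:
        self.notes.append(note)

    def build_messages(self, essay: str) -> list[dict[str, str]]:
        """Assemble chat messages; sending them to a model is left out."""
        history = "\n".join(f"- {n}" for n in self.notes) or "- (no history yet)"
        system = (
            "You are a writing tutor. Tailor feedback to this student's "
            "known patterns:\n" + history
        )
        return [
            {"role": "system", "content": system},
            {"role": "user", "content": essay},
        ]


ctx = StudentContext(max_notes=2)
ctx.remember("Often writes run-on sentences")
ctx.remember("Strong vocabulary")
ctx.remember("Forgets to cite sources")
messages = ctx.build_messages("My essay on climate change ...")
```

With `max_notes=2`, the oldest observation is dropped, so only the two most recent notes reach the system message.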

Rhymz — Creating Music in the Age of AI

The future of music is no longer locked behind expensive studios or hard-to-learn tools. With Rhymz, anyone can turn ideas into songs — from lyrics and melodies to voices, covers, and even AI-generated music videos.

We’re building Rhymz on top of powerful AI models and APIs that are already reshaping the music industry. Giants like Suno have proven what’s possible. With voice cloning, text-to-melody tools, and genre templates now accessible via API, the barriers to entry have largely fallen.

But here’s the real challenge: everyone has access to the same models. The difference lies in:

Prompt design: how creatively and effectively you guide the AI

Voice fine-tuning: building recognizable, unique voices

Infrastructure: optimizing quality, speed, and costs

User experience: turning tech into magic for the end user

Rhymz is designed not just for output, but for creative control. You choose the genre. You write or generate the lyrics. You select your voice (or upload your own). And with one tap, the app brings it all to life.

Music is becoming programmable — and Rhymz is your personal studio, powered by AI.

MYSLF — A Smarter Backbone with RAG, LangChain & Vector Intelligence

To deliver truly personalized fitness and wellness experiences, MYSLF goes far beyond basic app logic or simple AI chat integrations. We’ve built MYSLF on a modular intelligence layer that blends real-time data, long-term memory, and personalized AI reasoning.

Here’s how it works:

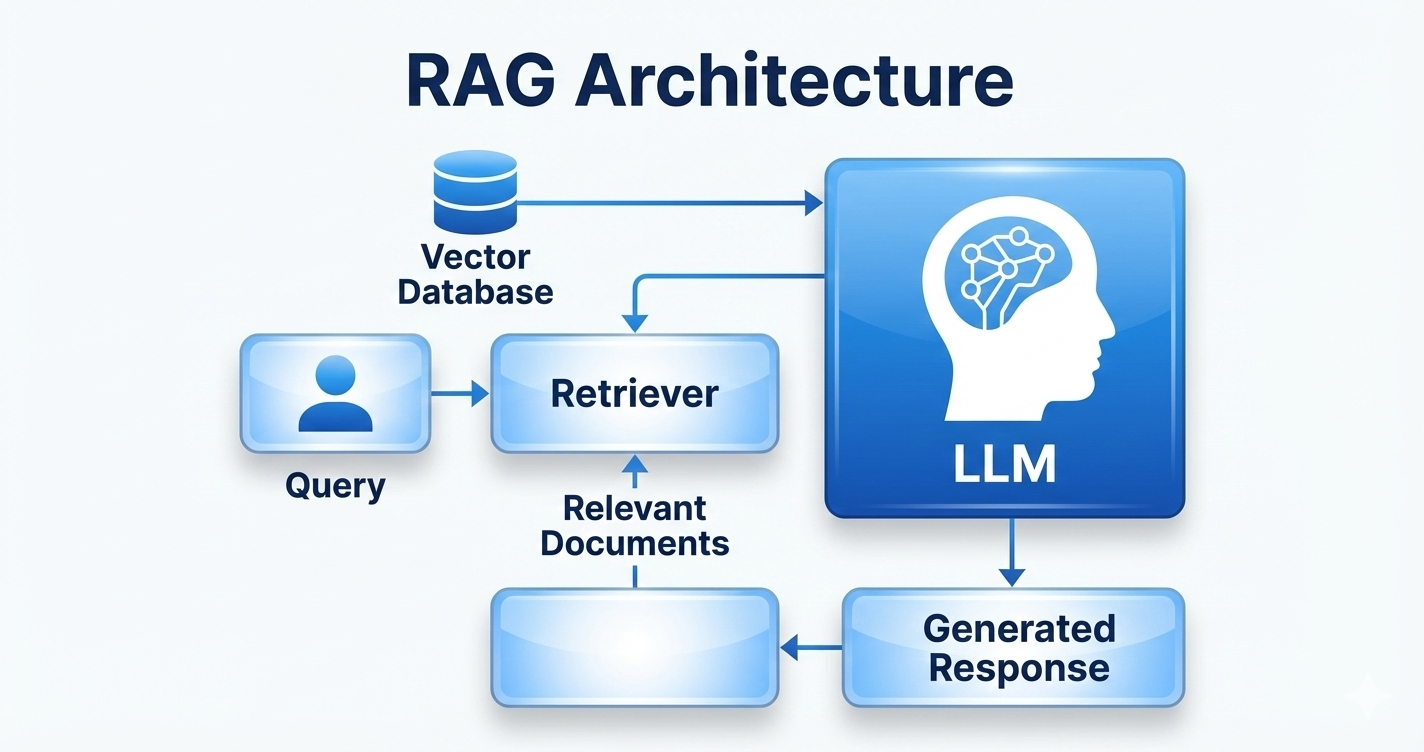

RAG (Retrieval-Augmented Generation)

We use RAG pipelines to combine large language models (LLMs) with live data. This allows our AI trainers to:

Recall your training history

Analyze your recent activity

Adjust feedback and tone based on your long-term goals

Instead of generating responses from scratch, MYSLF first retrieves relevant personal context from your data and passes it to the model, which helps keep responses accurate, consistent, and empathetic.
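The retrieve-then-generate pattern described above can be sketched roughly as follows. This is not MYSLF's actual pipeline: the `WorkoutNote` type, the `retrieve` and `build_prompt` helpers, and the sample notes are hypothetical, and simple word overlap stands in for the embedding search a real system would use. The final step, sending the prompt to an LLM, is intentionally left out.

```python
from dataclasses import dataclass


@dataclass
class WorkoutNote:
    """Hypothetical record of one past session (illustrative only)."""
    date: str
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase and strip basic punctuation so "knee," matches "knee".
    return {w.strip(".,!?").lower() for w in text.split() if w.strip(".,!?")}


def retrieve(query: str, notes: list[WorkoutNote], k: int = 2) -> list[WorkoutNote]:
    """Rank notes by word overlap with the query (stand-in for embedding search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(n.text)), n) for n in notes]
    scored = [(s, n) for s, n in scored if s > 0]  # drop unrelated notes
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [n for _, n in scored[:k]]


def build_prompt(query: str, context: list[WorkoutNote]) -> str:
    """Put the retrieved history in front of the user's question."""
    lines = [f"- {n.date}: {n.text}" for n in context]
    return (
        "Context from the user's history:\n"
        + "\n".join(lines)
        + f"\n\nUser question: {query}"
    )


notes = [
    WorkoutNote("2025-01-01", "Ran 5 km, knee felt sore"),
    WorkoutNote("2025-01-03", "Upper body strength session"),
    WorkoutNote("2025-01-05", "Easy run, knee felt fine"),
]
question = "How is my knee after running?"
hits = retrieve(question, notes)
prompt = build_prompt(question, hits)
```

Here only the two knee-related notes are retrieved, so the model would see the relevant history and nothing about the unrelated strength session.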

LangChain / LangGraph

Our AI coaching system is orchestrated using LangChain and LangGraph — frameworks built to handle multi-step reasoning, tool calling, and conversational workflows. This means:

Your AI coach doesn’t just chat — it thinks

It makes decisions based on multiple data sources

It can schedule, follow up, and adapt workouts dynamically
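The idea of a multi-step workflow with branching decisions can be illustrated with a small state machine. The sketch below is written in plain Python rather than with LangGraph's actual API, and every node name, state key, and threshold in it is a hypothetical example: each step reads and updates a shared state dictionary, then names the step to run next.

```python
from typing import Callable

State = dict  # shared state passed from step to step


def check_recovery(state: State) -> str:
    # Hypothetical rule: under 7 hours of sleep means a lighter day.
    state["recovered"] = state["sleep_hours"] >= 7
    return "keep_plan" if state["recovered"] else "lighten_plan"


def keep_plan(state: State) -> str:
    state["plan"] = state["planned_workout"]
    return "schedule_follow_up"


def lighten_plan(state: State) -> str:
    state["plan"] = "30 min mobility and easy walk"
    return "schedule_follow_up"


def schedule_follow_up(state: State) -> str:
    state["follow_up"] = f"Check in after: {state['plan']}"
    return "END"


# Each node returns the name of the next node, which gives conditional routing.
NODES: dict[str, Callable[[State], str]] = {
    "check_recovery": check_recovery,
    "keep_plan": keep_plan,
    "lighten_plan": lighten_plan,
    "schedule_follow_up": schedule_follow_up,
}


def run(state: State, start: str = "check_recovery") -> State:
    node = start
    while node != "END":
        node = NODES[node](state)
    return state


tired = run({"sleep_hours": 5, "planned_workout": "Heavy squats"})
rested = run({"sleep_hours": 8, "planned_workout": "Heavy squats"})
```

A graph framework adds persistence, tool calling, and LLM-driven routing on top of this basic shape; the sketch only shows the control flow.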

Vector Databases (Pinecone / Weaviate)

We store your interactions, habits, preferences, and workout performance in vector databases, enabling:

Fast semantic search (e.g. “what kind of exercises do I hate on Mondays?”)

Contextual recommendations based on past sessions

Personal memory that evolves with your fitness journey
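Semantic search of this kind comes down to comparing vectors. The following sketch uses an in-memory stand-in, not Pinecone's or Weaviate's client libraries, and the `TinyVectorStore` class and the three-dimensional vectors are made up for illustration. In practice the vectors would come from an embedding model and have hundreds of dimensions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Hypothetical in-memory store; a real deployment would use a vector database."""

    def __init__(self) -> None:
        self._items: list[tuple[list[float], str]] = []

    def add(self, vector: list[float], text: str) -> None:
        self._items.append((vector, text))

    def search(self, query: list[float], k: int = 1) -> list[str]:
        # Brute-force ranking; vector databases use approximate indexes instead.
        ranked = sorted(
            self._items, key=lambda item: cosine(query, item[0]), reverse=True
        )
        return [text for _, text in ranked[:k]]


store = TinyVectorStore()
# Made-up embeddings: the first axis loosely means "dislikes", the last "enjoys".
store.add([0.9, 0.1, 0.0], "Skipped burpees on Monday, said they were exhausting")
store.add([0.0, 0.2, 0.9], "Enjoyed yoga on Friday")

# Pretend this vector is the embedding of a question about disliked exercises.
results = store.search([0.8, 0.2, 0.1], k=1)
```

The query vector points in nearly the same direction as the burpees memory, so that entry ranks first even though the two texts need not share any exact words.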

Real-Time Feedback Loops

MYSLF constantly ingests:

Health data (Apple Health, Google Fit)

Your feedback after sessions

AI-inferred behavioral markers

All of this feeds into a live, adaptive workout plan — rebalanced daily using real metrics, not assumptions.
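A daily rebalancing step might look something like the sketch below. The `DailySignals` fields, the thresholds, and the adjustment percentages are assumptions chosen for illustration, not MYSLF's actual rules: the point is only that measured signals and user feedback, rather than a fixed schedule, drive the next day's plan.

```python
from dataclasses import dataclass


@dataclass
class DailySignals:
    """Hypothetical inputs for one day (illustrative only)."""
    sleep_hours: float    # e.g. read from a health data source
    session_rating: int   # post-session feedback, 1 (too easy) to 5 (too hard)


def rebalance(planned_minutes: int, signals: DailySignals) -> int:
    """Scale tomorrow's session length up or down from today's signals."""
    factor = 1.0
    if signals.sleep_hours < 6:
        factor -= 0.2  # poor sleep: reduce load
    if signals.session_rating >= 4:
        factor -= 0.1  # last session felt too hard
    elif signals.session_rating <= 2:
        factor += 0.1  # last session felt too easy
    # Never drop below a 10-minute minimum session.
    return max(10, round(planned_minutes * factor))


hard_day = rebalance(40, DailySignals(sleep_hours=5.0, session_rating=5))
easy_day = rebalance(40, DailySignals(sleep_hours=8.0, session_rating=1))
neutral_day = rebalance(40, DailySignals(sleep_hours=8.0, session_rating=3))
```

A production system would likely replace these hand-written rules with model-driven decisions, but the loop is the same: ingest signals, adjust the plan, repeat the next day.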

With MYSLF Apps, users:

Get smarter plans, based on their data — not someone else’s routine

Stay on track, with real-time feedback loops and intelligent nudges

Feel understood — because the AI listens, learns, and evolves with them

The outcome?

Higher engagement. Better retention. Healthier habits. A community of users who feel guided — not overwhelmed.

It’s not just about automation. It’s about building trust between humans and helpful AI — one workout, one essay, one track at a time.